How to Buy AI Automation Without Getting Burned: A Simple 3-Level Framework

Introduction: Why Buyers Are Getting Burned by AI Automation

If you feel confused by the phrase “AI automation,” you are not alone.

Right now, very different technologies are being sold under the same label. Some are rock solid and predictable. Others are flexible but risky. Buyers get burned not because AI does not work, but because they were never given a clear framework for choosing the right level of AI for the job.

Every buyer wants AI to move faster than humans.

No buyer wants to explain to their CEO why the AI broke something mission critical.

This article gives you a clear, practical framework for evaluating AI automation vendors without wasting budget or trust.

By the end, you will understand:

- The three distinct levels hidden inside “AI automation”

- How to choose the right level based on structure and risk

- How to evaluate AI vendors without taking unnecessary risk

Why “AI Automation” Is So Confusing Right Now

The AI market has collapsed multiple concepts into a single buzzword.

Traditional automation, LLM-powered workflows, and fully agentic systems behave very differently, yet many vendors present them as interchangeable. As a result, teams deploy overly complex systems where simple automation would have worked, or they introduce risk without understanding why.

Here is what happens in practice:

Sales teams automate follow-up with tools that cannot handle messy replies, so deals stall.

Marketing teams deploy AI chatbots that qualify leads inconsistently, so reps stop trusting the data.

Operations teams over-automate critical workflows that should never fail, introducing fragility where there should be none.

The problem is not that AI does not work.

The problem is that AI companies are selling you the wrong level of automation for the job.

The fastest way to regain clarity is to sort AI automation along two axes: structure and risk.

The Three Levels of AI Automation

Level 1: Traditional Automation

Structured input to structured output

Zero tolerance for failure

Traditional automation is boring by design. And that is a compliment.

If the same input should always produce the same output, AI is unnecessary. This is the world of rules, triggers, and deterministic systems.

Examples include:

- Routing leads based on firmographic rules

- Updating CRM fields

- Sending confirmation or reminder emails

- Deterministic data transformations

As our co-founder explains:

“Traditional automation is great for things that can never break, where the input and the output are clearly defined. You should not be putting in AI agents. You should be using traditional automation.”

If failure is unacceptable, this is where you stop.

Any vendor pushing AI agents at this level is creating risk without payoff.
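To make "same input, same output" concrete, here is a minimal sketch of rule-based lead routing. The `Lead` fields, thresholds, and queue names are made up for illustration; real routing rules would come from your own CRM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lead:
    company_size: int  # number of employees (illustrative field)
    region: str        # e.g. "NA" or "EMEA" (illustrative field)


def route_lead(lead: Lead) -> str:
    """Return a queue name using fixed rules: no model, no randomness."""
    if lead.company_size >= 1000:
        return "enterprise"
    if lead.region == "EMEA":
        return "emea_mid_market"
    return "general"


lead = Lead(company_size=2500, region="NA")
# The same lead lands in the same queue on every call.
assert route_lead(lead) == route_lead(lead) == "enterprise"
```

Nothing in this function can surprise you, which is exactly the property a workflow that can never break needs.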

Level 2: LLM Workflows

Unstructured input to structured output

Moderate risk with clear guardrails

LLM workflows sit in the middle, and this is where most revenue-facing AI should live today.

Here, AI interprets messy human input but still lands in a controlled outcome. Conversations, emails, and form responses may vary wildly, but the system must still end in a known state such as qualified, routed, scheduled, or disqualified.

Examples include:

- Classifying inbound lead intent

- Determining which sales or marketing playbook to trigger

- Summarizing conversations into structured CRM notes

- Conversational lead qualification and routing

As our co-founder puts it:

“Generally, LLM workflows do very well for unstructured input to a structured output. Somebody talking to an LLM and an LLM coming up with a decision of what to go to next.”

The AI has freedom, but only at specific decision points. The outcome remains structured and auditable. This balance of flexibility and control is why LLM workflows are ideal for GTM use cases.
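A rough sketch of what "freedom only at a decision point" can look like in code: the model reads the messy message, but its answer is forced into one of the known states before anything downstream sees it. The `llm` callable and `fake_llm` are stand-ins rather than a real model API, and the `NEEDS_HUMAN_REVIEW` fallback state is an assumption added for this example.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    QUALIFIED = "qualified"
    ROUTED = "routed"
    SCHEDULED = "scheduled"
    DISQUALIFIED = "disqualified"
    NEEDS_HUMAN_REVIEW = "needs_human_review"  # fallback state (assumption)


def classify_message(message: str, llm: Callable[[str], str]) -> Outcome:
    """Ask the model for a label, then force the result into a known state."""
    raw = llm(message).strip().lower()
    try:
        return Outcome(raw)
    except ValueError:
        # The model answered outside the allowed set: hand off to a person.
        return Outcome.NEEDS_HUMAN_REVIEW


def fake_llm(message: str) -> str:
    """Stand-in for a real model call (hypothetical, illustration only)."""
    return "Scheduled" if "demo" in message.lower() else "not sure, maybe?"


print(classify_message("Can we book a demo next week?", fake_llm))
print(classify_message("hmm, tell me more", fake_llm))
```

Whatever the model says, the workflow can only end in a state you defined in advance, which is what keeps the outcome auditable.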

Level 3: Agentic Workflows

Unstructured input to unstructured output

High freedom and higher risk

Agentic systems operate with true autonomy.

There is no predefined path from A to B. The AI is given a goal rather than a process. It explores options, chooses routes dynamically, and may arrive at different outcomes each time.

Examples include:

- Exploratory research

- Internal experimentation

- Scenario modeling

- Open-ended problem solving where discovery is the goal

Our co-founder describes it clearly:

“A truly agentic workflow is unstructured input to unstructured output. I do not have a defined A path to B path. I have an open-ended question.”

This level is powerful, but unpredictability is not a bug. It is the tradeoff. Agentic workflows do not belong in customer-facing systems unless they are tightly contained.

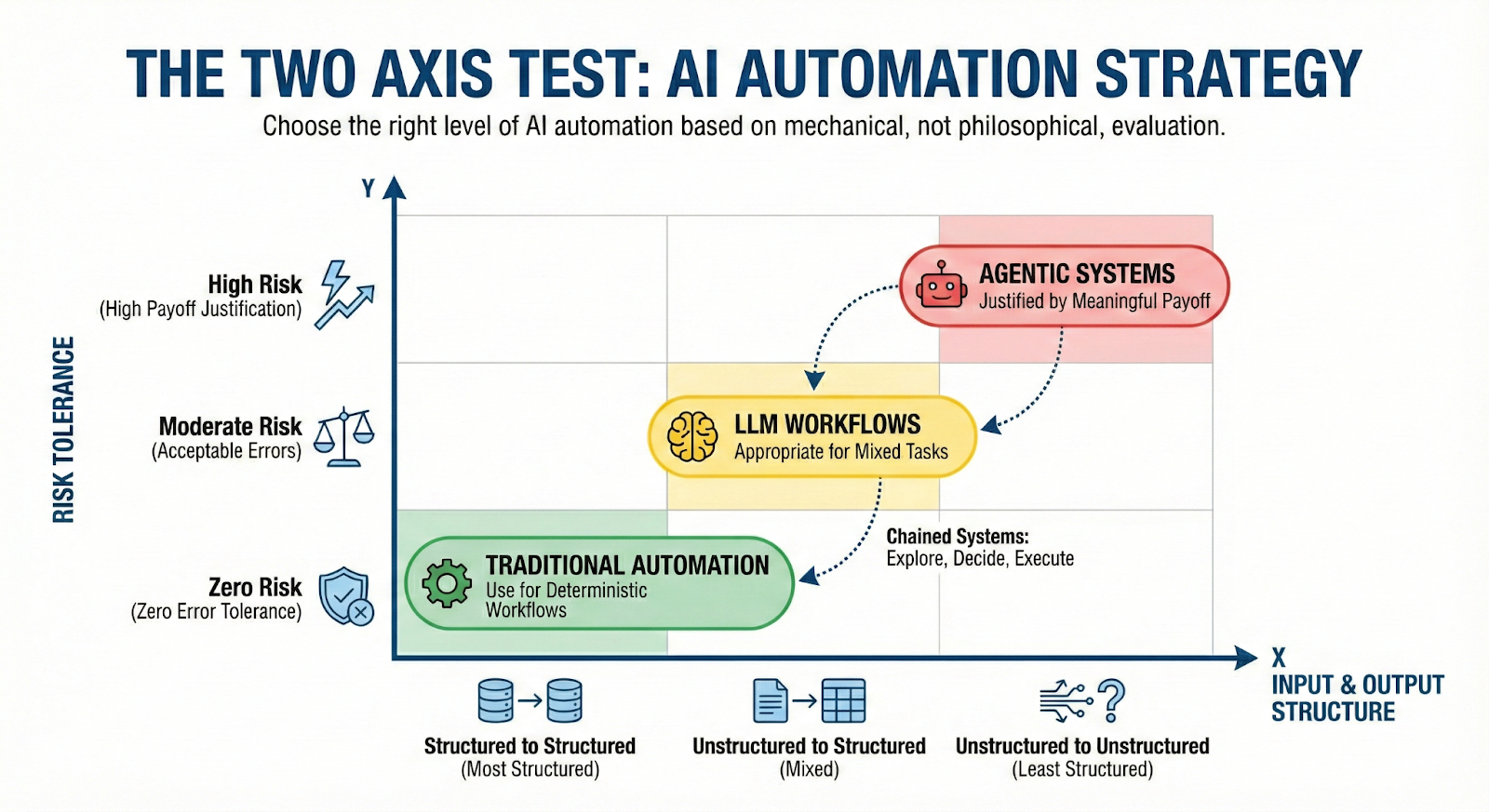

The Two-Axis Test: Structure Plus Risk

Choosing the right level of AI automation is not philosophical. It is mechanical.

Before evaluating any vendor, ask two questions.

X Axis: Input and Output Structure

- Is this structured to structured?

- Unstructured to structured?

- Unstructured to unstructured?

Y Axis: Risk Tolerance

- Is zero risk allowed?

- Is moderate risk acceptable?

- Is high risk justified by payoff?

As our co-founder puts it:

“The next thing to layer on top of that is, what is the level of risk that I can take with this workflow?”

If risk tolerance is zero, stay with traditional automation.

If moderate risk is acceptable, LLM workflows are appropriate.

If high risk is acceptable and the payoff is meaningful, agentic systems may be justified.
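Because the test is mechanical, it can be written down as a small function. The sketch below is one possible reading of the two axes, not a definitive rule set. It assumes that when the axes disagree, the more conservative level wins; that tie-break is an assumption of this example, and in practice a mismatch may be a sign the workflow needs rescoping. All names here are illustrative.

```python
from enum import Enum


class Structure(Enum):
    STRUCTURED_TO_STRUCTURED = 1
    UNSTRUCTURED_TO_STRUCTURED = 2
    UNSTRUCTURED_TO_UNSTRUCTURED = 3


class RiskTolerance(Enum):
    ZERO = 1
    MODERATE = 2
    HIGH = 3


LEVELS = {
    1: "traditional automation",
    2: "LLM workflow",
    3: "agentic workflow",
}


def recommend_level(
    structure: Structure,
    risk: RiskTolerance,
    meaningful_payoff: bool = False,
) -> str:
    """Pick the lowest level that both axes allow."""
    # Y axis: risk tolerance caps how much autonomy is acceptable.
    allowed = risk.value
    if risk is RiskTolerance.HIGH and not meaningful_payoff:
        allowed = 2  # high risk without a payoff does not justify agents
    # X axis: input/output structure sets how much autonomy the job needs.
    needed = structure.value
    # Assumption: when the axes disagree, take the more conservative level.
    return LEVELS[min(needed, allowed)]


print(recommend_level(Structure.UNSTRUCTURED_TO_STRUCTURED, RiskTolerance.MODERATE))
```

Running the example prints `LLM workflow`: messy input, a known set of outcomes, and moderate risk tolerance.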

Many successful systems chain these levels together. An agent explores, then hands decisions off to deterministic automation for execution.

How to Evaluate AI Automation Vendors Without Getting Burned

When buyers skip evaluation discipline, mistakes happen. Use the following checks in every AI vendor conversation.

1. Can They Clearly Explain the Three Levels?

A serious vendor can explain:

- Why one level fits your use case

- Why another level does not

- The tradeoffs involved at each level

If everything is described as “agentic,” that is a red flag.

2. Are They Honest About Risk Versus Payoff?

Risk should never be hidden.

As our co-founder explains:

“The only reason you would take that risk is there is a payoff.”

A trustworthy vendor can articulate what could go wrong, why the risk exists, and what benefit justifies it. If risk is downplayed or ignored, walk away.

3. Can They Recommend Less AI?

Strong vendors say no.

They will tell you when traditional automation is the correct answer and will not force AI where it does not belong. The inability to recommend simpler solutions usually signals immaturity.

4. Can They Explain Where Human Control Lives?

Ask where guardrails, overrides, and fallbacks exist. If the answer is vague, accountability will be too.

5. Do Outcomes Match the Risk Taken?

Every increase in autonomy should come with a clear payoff. If the risk is high and the benefit is marginal, do not proceed.

Final Thought

The teams winning with AI are not chasing novelty. They are disciplined.

As our co-founder summarized, the competitive edge now comes from getting every micro moment right. That starts with choosing the correct level of automation and being honest about the tradeoffs.

If you want AI that actually improves revenue outcomes, start by choosing the right level of automation for the problem you are solving. One clear decision can prevent months of cleanup later.